AI Risk and Threat Taxonomies

Posted on Tue 05 August 2025 in security

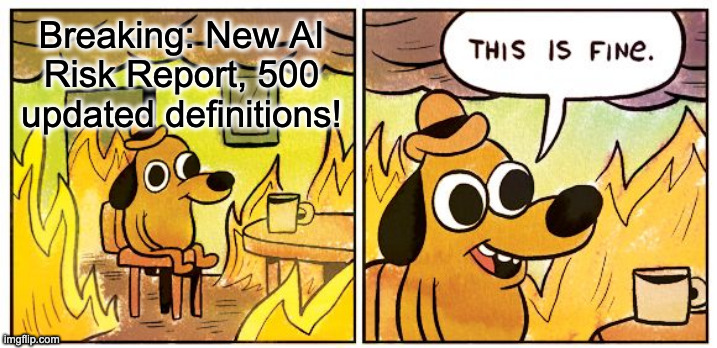

It seems like every week my LinkedIn feed is filled with newly released AI risk taxonomies, threat models or AI governance handbooks. Usually these taxonomies come from governance consultants or standards authorities, and they are a great reference for understanding the wide variety of risks AI systems¹ bring with them... but...

Often they are maddeningly impractical.

Let's say you're a governance or technical expert on a team and you get forwarded a 500-definition taxonomy with little to no categorization of where the threat lies, how to implement controls and, most importantly, whether it even applies to you. Where do you start with a document like that? For your mental health, I'd recommend closing your browser and making yourself a cup of tea in the garden...

So, let's NOT stop making taxonomies (they are useful as a reference), but let's START making deeply practical approaches for people who actually work in governance, data and AI. These documents can help teams:

- Prioritize which risks matter: Do you train your own models? No? Then for the love of the universe stop talking about data poisoning; it's not your problem! Instead, figure out which threats are actually relevant to the ways you use AI/ML systems, and look only at those attacks and vulnerabilities first.

- Stop (just) citing papers and start red teaming and testing: Papers are great. I love papers, really; have you seen my book's citations? ;) But have you heard of papers with code? Once you know which attacks concern you, work with technical team members to actually build and test a few of them.

- Build out data governance infrastructure: Most organizations aren't training or hosting extremely large models themselves, but they are building tooling around these systems. Focus on getting the data governance basics right (documentation, tagging, cataloging, lineage and quality tracking) so that, as your data/AI/ML maturity grows, the basics are already covered and you're ready to go.

- Focus on system components and data access: Concerned about AI privacy and security? Focus on which data and documents the system can access, and how. Build protections just as you would for any other data access. For example, removing potentially sensitive sources from the data the AI system can access is a great start.

- Flex your multidisciplinary risk muscle: Not yet doing multidisciplinary risk assessment and evaluation? You're living in the past, bud! Yes, it'll "slow you down" and introduce new processes at first, but the benefits (faster releases and higher-quality, privacy-aware, secure products) will definitely outweigh that initial friction.
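To make the data-access tip a bit more concrete, here is a minimal sketch, assuming your data catalog already tags each source with sensitivity labels. The `DataSource` class, the tag names and the `allowed_sources` helper are hypothetical illustrations, not part of any particular catalog product. The sketch applies a default-deny rule: untagged sources stay out until someone has reviewed and tagged them.

```python
from dataclasses import dataclass, field

# Hypothetical sensitivity labels; substitute the ones your data catalog actually uses.
SENSITIVE_TAGS = frozenset({"pii", "health", "credentials", "confidential"})


@dataclass(frozen=True)
class DataSource:
    """A hypothetical catalog entry for a data source an AI system could read."""
    name: str
    tags: frozenset = field(default_factory=frozenset)


def allowed_sources(catalog, blocked_tags=SENSITIVE_TAGS):
    """Return the sources the AI system may access.

    Default-deny: a source is allowed only if it has at least one tag
    and none of its tags are in blocked_tags. Untagged sources are
    excluded until someone reviews and tags them.
    """
    return [src for src in catalog if src.tags and not (src.tags & blocked_tags)]


if __name__ == "__main__":
    catalog = [
        DataSource("product_docs", frozenset({"public"})),
        DataSource("support_tickets", frozenset({"pii"})),
        DataSource("hr_records", frozenset({"pii", "confidential"})),
        DataSource("wiki_export"),  # untagged: not yet reviewed
    ]
    print([src.name for src in allowed_sources(catalog)])  # ['product_docs']
```

In practice you'd run a check like this wherever data sources get connected to the AI system (for example, before documents are indexed for retrieval), using the labels your own catalog defines. Default-deny is a design choice: it trades some convenience for not silently exposing sources nobody has looked at yet.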

Getting the ball rolling, even if you start small, is the most important thing you can do to build more secure, more privacy-aware systems. From there, your ability to address all of those taxonomies grows with practice and with platforms and systems that help you assess, manage and reduce the impact of new risks, threats and vulnerabilities.

Want more tips like this in your inbox? Subscribe to my newsletter or my YouTube channel to get the latest.

I'm curious: do you have any other practical tips for folks getting started on AI system risk? Do taxonomies help you in your work? If so, for what work, and how? And what are you doing beyond taxonomy work to address risk?

¹ An AI system includes the machine learning models, monitoring, evaluation, software, infrastructure/networking/hardware and data needed to run an AI-based product or service.