Deep learning memorization, and why you should care

Posted on Mo 04 November 2024 in ml-memorization

When's the last time that ChatGPT parroted someone else's words to you? Or the last time a diffusion model you used recreated someone's art, someone's photo, someone's face? Has Copilot given you someone else's code without permission or attribution? If this happened, how would you know for sure?

In this article series, you'll explore how and why memorization happens in deep learning1, as well as what can be done to address the issues it raises.

However, to ensure it's worth studying, let's investigate if this phenomenon really occurs?

Memorization in the wild

NYT vs. OpenAI

NYT vs. OpenAI

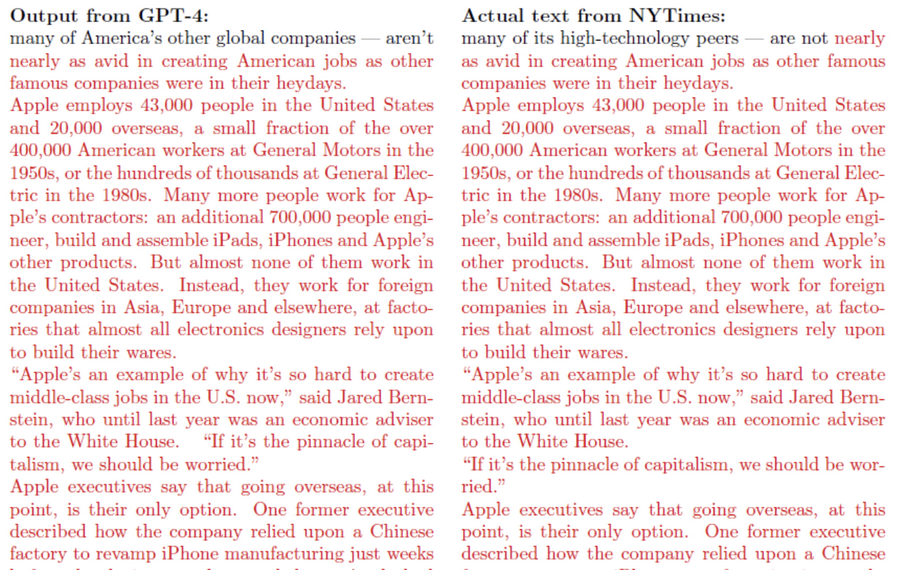

Here is a screenshot of an excerpt from the New York Times lawsuit against Microsoft and OpenAI. On the right is the original text of the New York Times article. On the left you can see the extracted text from GPT-4. If a word is red, it means it was directly repeated and therefore memorized by the deep learning model. Is this a violation of copyright law?

Stable Diffusion Face Extraction

Stable Diffusion Face Extraction

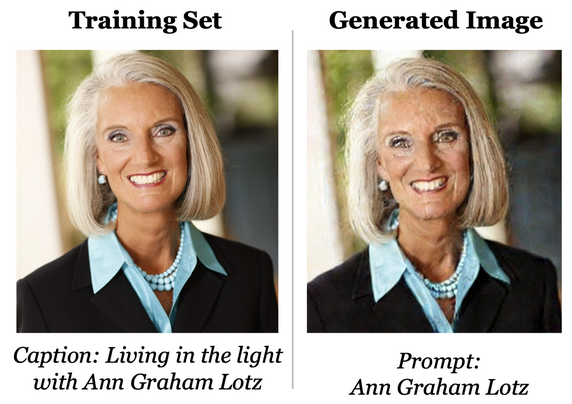

And here is an example from a stable diffusion model trained by Carlini et al. on Stable Diffusion's training dataset. This person's face is repeated less than three times in the training data. When prompted with the person's name, you can reproduce their face, or more specifically, the photo from the training dataset. Is this a violation of privacy?

Is OpenAI's Skye imitating ScarJo?

Link to watch above video

Is OpenAI's Skye imitating ScarJo?

Link to watch above video

Finally, here is an example of the new release of OpenAI's GPT-4o features, which originally started with a voice that sounded eerily like Scarlet Johansson's voice. Johansson had been approached several times by Sam Altman to be the voice of the new system but declined. Instead, it appears OpenAI found a voice actor to mimic her voice in order to give a cultural hat tip to her role in the movie Her, where she voiced the AI character.

What is happening here?

Understanding how deep learning systems work and succeed at tasks is an active area of research for more than a decade. In this series, you'll explore both the technical aspects of how these models memorize, but also the creation of a machine learning community culture that allows this to take place. You'll review the seminal research around privacy, security and memorization in deep learning, and better understand deep learning because of it. This knowledge will also help you better understand how to approach and use models and AI systems.

You'll start by looking at how datasets are collected and what their properties are, then, explore machine learning training and evaluation and the impact of those choices. You'll investigate what data repetition and novelty have to do with memorization, and how that can be mathematically modeled and proven. You'll learn the relations between overparameterization, model size and memorization and see some examples of how this phenomenon was discovered long before GPT models were released.

You'll also explore several ideas for how memorization can and should impact the way machine learning engineers manage data, the way models are trained, the way we talk about "intelligent systems" and how to reason about when to use deep learning.

But, why should I care about memorization?

As one person who I spoke with put it, "it doesn't really matter if a model memorizes, as long as it brings us closer to human-level intelligence". But is that true?

There is very little intelligence in merely saving a string of tokens or pixels and being able to repeat them when prompted. It is something that we humans are not so great at, but that is due to our intelligence, not in spite of it. Rote memorization is something computers have done for many decades and something they excel at.

This critique is echoed in the remarks from LeCun and many other deep learning researchers for several years now. The current way that practitioners train large language and computer vision systems are inherently linked to the training data and the limits within that data. These models can get quite good at mimicking the data, but it's heavily disputed if their performance shows deep reasoning, world models or systems thinking.

Memorization is not learning, even if it can mimic learning. If you want to build intelligent systems you'll have to do much better than memorization. And you'd need to prove that deep learning models are capable of significantly more than memorization and remixing. Based on what you'll learn around evaluation datasets, you'll likely have new questions for how machine learning practitioners review what is learned, what is generalizable and how the field might actually move forward towards better generalization.

There are additional reasons to care about memorization. Privacy is a fundamental human right according to the UN Human Rights Convention. The human right to self-determination about how information related to your personhood, your life and your behavior is collected, stored and used is a common understanding across many cultures, nations, lands and societies. As seen in the Second World War, how governments and technology systems collect, use, and proliferate data has a direct impact people's lives.

Privacy is closely related to trust, and how you manage your own privacy relates to who, how and what you trust. In this way, privacy mirrors social bonds that help keep society functioning, that help promote equality amongst persons and that create trust and accountability amongst ourselves and our institutions. When your trust in something is broken, you likely no longer want to share intimate details or data with such systems. And when your privacy is violated, for example, via online stalking or harassment, or even smaller examples, like a super creepy ad or a post that got shared out of context, you may feel violated. Your trust was broken.

Privacy isn't equally available to everyone -- despite common beliefs to the contrary. Some of us have what I call "privacy privilege", where your face is not stored in a database used by the police or state intelligence to track your movements. Some of us might represent the best outcomes in the models, where those systems work in our favor. For example, you are granted automatic entrance in an interview process or you get pre-approved for a loan. In those cases, your trust isn't violated by the system's usage. But there are many persons who do not fall into those categories - where these systems violate their privacy, their right to self-determination, their right to protect themselves from algorithmic classifications and categorizations.

Memorization in machine learning has deep implications in how to reason about choices in machine learning, and studying it can better expose phenomena like unfair outcomes, overexposed persons and how machine learning systems link to other systems of power and oppression in our world.

Memorization violates consent, erodes privacy and throws what all of us are being sold under the banner of "intelligence" into question. By exposing how memorization works, you are also pushing for more realistic views of AI systems and more realistic assumptions around how they can and should be used. You are also evaluating how they shouldn't be used. By studying memorization, you counter fraudulent messages on how machine learning works, and expose much more interesting fields of study based in real science.

Let's dive in!

I hope you're excited to learn more. In the coming articles, you'll explore how deep learning systems create the opportunity for memorization, along with a better understanding of how it happens.

To get a head start, if you already work in machine learning, I want you to reflect on the following:

- How do you collect data?

- How do you incentivize and optimize learning?

- How do you architect deep learning models?

- How do you govern data usage in ML systems?

The next article specifically investigates data collection, looking at how long-tail datasets create uneven distributions to be learned. To stay up-to-date, you can sign up for the Probably Private newsletter or follow my work on LinkedIn.

I'm very open to feedback (positive, neutral or critical), questions (there are no stupid questions!) and creative re-use of this content. If you have any of those, please share it with me! This helps keep me inspired and writing. :)

Acknowledgements: I would like to thank Vicki Boykis, Damien Desfontaines and Yann Dupis for their feedback, corrections and thoughts on this series. Their input greatly contributed to improvements in my thinking and writing.

-

In this series, you'll explore deep learning as a field, which includes the use and training of neural networks to perform a task or series of tasks. A large language model (LLM) is a particular type of deep learning model which can either produce text (just like a normal language model), or answer chats with instructions or prompts, which is what ChatGPT does. You'll learn more about how these systems work from small building blocks (neurons and layers) to the entire model by studying how they are built, trained, evaluated and used. ↩